TECHNOLOGY DEEP DIVE

Global Metadata

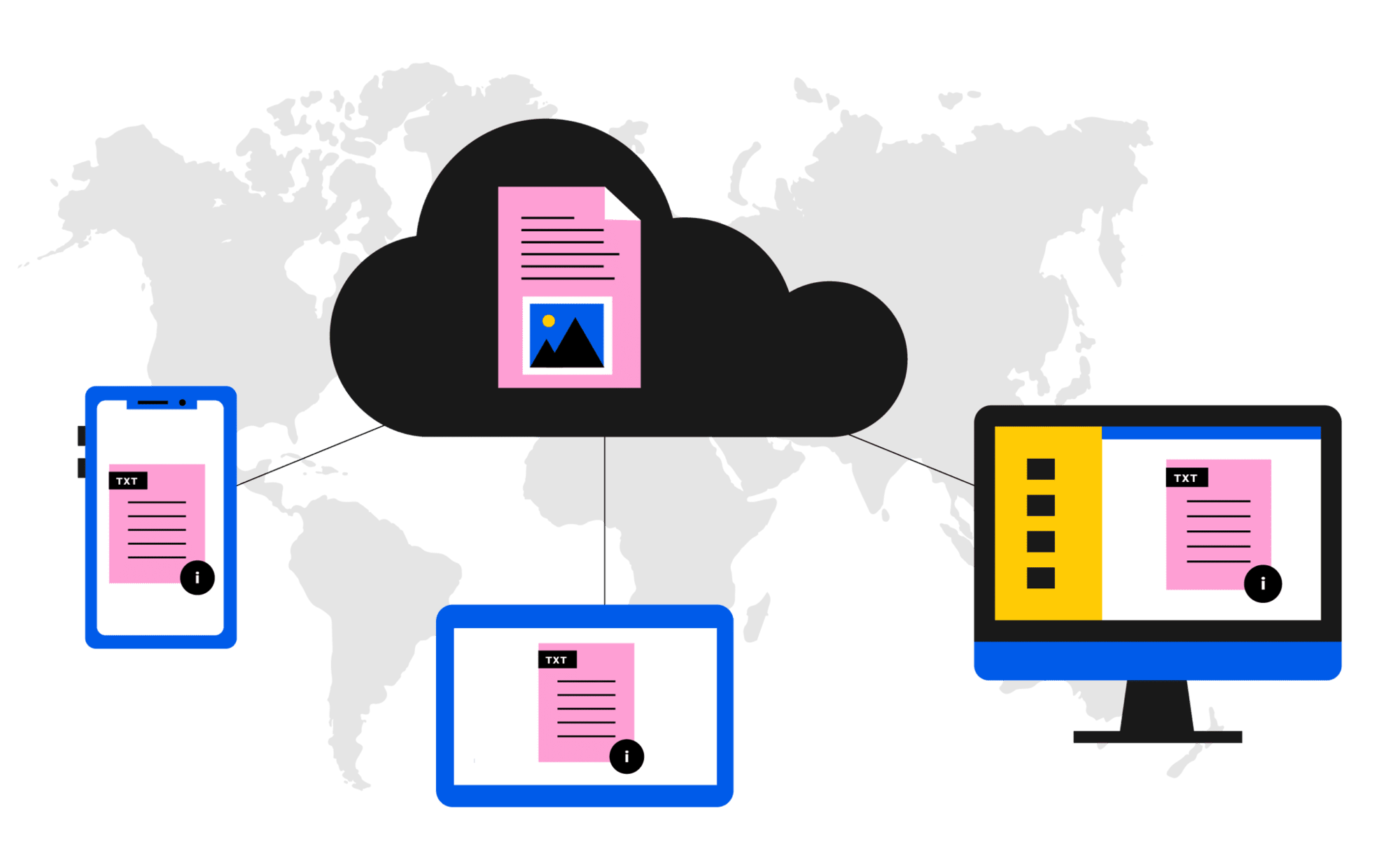

Metadata, or data about data, is the fundamental building block for both of Panzura's platforms. Panzura CloudFS relies on global metadata for its core function as a high-performance, distributed file system, while Panzura Symphony goes further, turning metadata into a rich, actionable asset for data governance, analytics, and AI.

Global Metadata in an Enterprise Hybrid Cloud File Platform

Metadata is what a file system uses to find and organize data, and it’s the foundation for performance in Panzura's hybrid cloud global file platform, CloudFS.

Panzura CloudFS™ consolidates enterprise data in the cloud and facilitates performant cloud file operations for users and processes.

Every node in the file system holds a local copy of the metadata for the entire file system. Because every node has all of the metadata, users at any location can read data written at any other location. That means there is no need to duplicate or mirror files between sites: files are stored as a single source of truth in the cloud and cached locally for high performance.
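The read path described above can be illustrated with a minimal sketch. This is not Panzura's implementation: the `FileMeta` and `Node` classes, the in-memory dictionaries standing in for the cloud object store and the local cache, and the `cloud_reads` counter are all hypothetical names introduced only to show the idea of "full metadata everywhere, one authoritative copy of the data, local caching on demand".

```python
from dataclasses import dataclass, field


@dataclass
class FileMeta:
    """Hypothetical metadata record: where a file's data lives in the object store."""
    path: str
    object_key: str
    size: int


@dataclass
class Node:
    """Hypothetical site node: complete metadata, partial local data cache."""
    metadata: dict  # path -> FileMeta, complete for the whole file system
    cloud: dict     # object_key -> bytes, stands in for the shared cloud store
    cache: dict = field(default_factory=dict)  # object_key -> bytes, local only
    cloud_reads: int = 0

    def read(self, path: str) -> bytes:
        meta = self.metadata[path]  # local lookup, no network round trip
        if meta.object_key not in self.cache:
            # Cache miss: fetch the single authoritative copy from the cloud.
            self.cache[meta.object_key] = self.cloud[meta.object_key]
            self.cloud_reads += 1
        return self.cache[meta.object_key]


cloud_store = {"obj-001": b"quarterly report"}
global_metadata = {"/finance/q1.docx": FileMeta("/finance/q1.docx", "obj-001", 16)}

# Two sites share the same metadata and the same cloud copy; nothing is mirrored.
london = Node(metadata=global_metadata, cloud=cloud_store)
sydney = Node(metadata=global_metadata, cloud=cloud_store)

first = sydney.read("/finance/q1.docx")   # fetched from the cloud, then cached
second = sydney.read("/finance/q1.docx")  # served from Sydney's local cache
```

In this sketch the second read at the same site never touches the cloud store, and the other site holds no copy of the data until one of its users asks for it.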

In an enterprise-grade distributed cloud file system, global metadata underpins two key aspects of performance: local access speed and global synchronization speed.

Fast Local Access

CloudFS uses flash storage for fast metadata access and caching. Flash lets the system service random I/O workloads at high IOPS (input/output operations per second) and respond efficiently to sequential read requests when users open files. High IOPS and low latency are essential to file system performance.

Flash storage is about 100 times faster than legacy disk drives and provides up to 400 times more IOPS. So even on a traditional array, it makes sense to put metadata on flash where user requests will be served quickly. Every major enterprise storage solution uses flash for metadata and caching.

Fast Global Synchronization

If a user changes a file in one office, the file's metadata needs to update immediately in every other location, or productivity suffers. Panzura’s patented technology keeps metadata synchronized in real time, in every node, in every location, so your entire team has an up-to-the-moment view of every file, whether they’re working from your head office or from home.


Actionable Metadata for Intelligent Data Operations

The Panzura Symphony data services platform reframes metadata as an intelligent, strategic tool for data governance, lifecycle management, and AI. It can connect to a wide range of enterprise data repositories, from on-premises and cloud file and object storage to data lakes, to create a single global view over unstructured data. This metadata-aware platform can augment the basic metadata found in file systems and build a detailed, searchable metadata catalog of your entire unstructured data estate, extracting rich metadata that would otherwise be locked within files.

How Symphony uses this metadata:

- Automate Data Movement and Lifecycle Management: Symphony brings order to sprawling, unstructured data. It does this by first scanning all data sources, whether on-premises or in the cloud. It extracts metadata tags, such as file size, last access date, file type, and owner. Using this information, IT and data leaders can create highly specific policies within Symphony. For example, a policy might state: “Any file of type .log that has not been accessed in over 90 days and is larger than 1GB should be automatically moved to a lower-cost archival tier, such as Amazon S3 Glacier.” Symphony then executes these policies automatically and transparently, ensuring data is always in the most cost-effective location without disrupting user workflows. It can even move data to tape, maintaining a searchable record of where the data is stored.

- Enhance Governance and Security: With its centralized metadata catalog, Symphony provides a single pane of glass for analyzing and managing data across heterogeneous storage environments. Instead of manually inspecting multiple file servers and cloud buckets, security and governance teams can use Symphony to conduct exabyte-scale audits. The platform's metadata analysis can identify and remediate permission sprawl by flagging files with overly broad access rights or files that still belong to off-boarded employees. It provides a granular audit trail of who accessed which files and when, helping organizations ensure compliance with regulations like GDPR or HIPAA by proving where sensitive data resides and who has access to it.

- Power AI and Analytics Workflows: One of the biggest challenges for AI and analytics is the sheer volume of data. Moving petabytes of data to a processing engine is slow, costly, and inefficient. Symphony solves this problem by creating a metadata proxy of your entire data estate. This proxy is a comprehensive, searchable catalog that is a tiny fraction of the size of the original files. AI models and analytics tools can then interact with this lightweight proxy, allowing them to quickly identify relevant data without ever having to move the full, massive files. For example, an AI model can query the metadata catalog to find all files related to a specific project and then analyze their attributes (e.g., file type, creation date) before a single file is moved. This dramatically streamlines AI workflows, reduces network traffic and storage demands, and accelerates time-to-insight.
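To make the three uses above concrete, here is a small, hedged sketch of how metadata-only policies and queries can work in principle. It does not use or represent Symphony's actual policy language or API: `CatalogEntry`, `apply_archive_policy`, `owned_by_offboarded`, and `find_by_project` are hypothetical names, the `project` field is an assumed tag, and 1GB is taken here to mean 10^9 bytes. The point is only that every decision is made from catalog records, without opening the files themselves.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

GB = 10 ** 9  # assumption: "1GB" in the example policy means 10^9 bytes


@dataclass
class CatalogEntry:
    """Hypothetical catalog record built from scanned file metadata."""
    path: str
    size_bytes: int
    last_accessed: datetime
    owner: str
    project: str          # assumed tag, not named in the text above
    tier: str = "primary"


def is_stale_large_log(entry, now, max_age_days=90, min_size=GB):
    """The example policy: .log files, unaccessed for over 90 days, larger than 1GB."""
    return (
        entry.path.endswith(".log")
        and now - entry.last_accessed > timedelta(days=max_age_days)
        and entry.size_bytes > min_size
    )


def apply_archive_policy(catalog, now, target_tier="archive"):
    """Retag matching entries; a real system would also move the data itself."""
    moved = []
    for entry in catalog:
        if entry.tier != target_tier and is_stale_large_log(entry, now):
            entry.tier = target_tier
            moved.append(entry.path)
    return moved


def owned_by_offboarded(catalog, active_users):
    """Governance check: files whose owner is no longer an active user."""
    return [e.path for e in catalog if e.owner not in active_users]


def find_by_project(catalog, project):
    """Metadata-only query: select candidate files without reading their contents."""
    return [e.path for e in catalog if e.project == project]


now = datetime(2025, 1, 1)
catalog = [
    CatalogEntry("/var/app/old.log", 2 * GB, now - timedelta(days=120), "alice", "apollo"),
    CatalogEntry("/var/app/new.log", 2 * GB, now - timedelta(days=5), "bob", "apollo"),
    CatalogEntry("/var/app/small.log", 10_000, now - timedelta(days=200), "bob", "zeus"),
    CatalogEntry("/data/scan.tif", 5 * GB, now - timedelta(days=400), "carol", "zeus"),
]

archived = apply_archive_policy(catalog, now)
orphaned = owned_by_offboarded(catalog, active_users={"alice", "bob"})
apollo_files = find_by_project(catalog, "apollo")
```

Only the first entry satisfies all three policy conditions, so it is the only one retagged; the other log files fail on age or size, and the image fails on file type. Running the policy a second time moves nothing, because matching entries are already in the target tier.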