- Products

- OUR PLATFORM

The Panzura Data Management Platform: Modernize your file data storage infrastructure and improve security.

Discover why modern data leaders prefer the Panzura Data Management Platform

- OUR PRODUCTS & OFFERINGS

Panzura CloudFS: Simplify & secure your data storage with a single authoritative source.

Panzura Detect and Rescue: Add ransomware resilience with active detection, alerts & expert support.

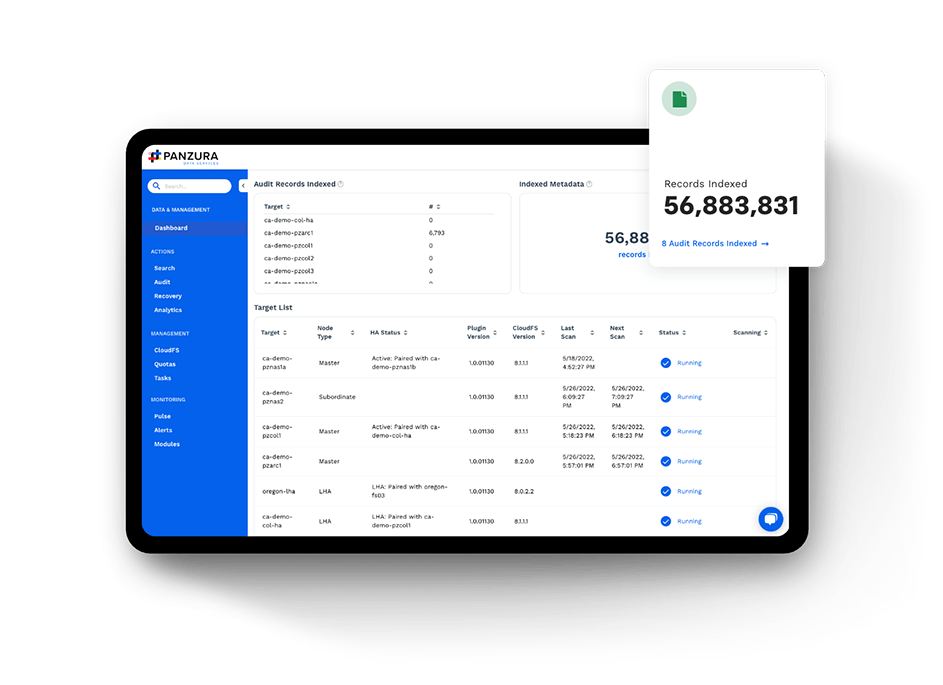

Panzura Data Services: Get visibility, governance & analytics in a unified SaaS dashboard.

Panzura Edge: Improve data access & power collaboration with integrated tools.

- Solutions

- SOLUTIONS

- Banking, Financial Services & Insurance: Delivering financial value by driving digital transformation

- Architecture, Engineering & Construction: Improving time-to-value by securing data & enhancing cross-site collaboration

- Healthcare & Life Sciences: Protecting patient data, improving outcomes & powering research

- Media & Entertainment: Powering secure global collaboration & reducing exponential data growth

- Manufacturing: Streamlining workflows & improving efficiency to accelerate time to market

- Public Sector: Providing military-grade security & enabling advanced data compliance

- Resources

- Support

- CUSTOMER SUPPORT

- Global Services: Enjoy streamlined data migration & world-class customer service.

- Service Hub: You deserve the best service the industry has to offer. Get it here.

- Knowledge Base: Learn everything you need to know about Panzura products & services.

- Partner Portal: Access tools & resources designed exclusively for our channel partners.

- Support Information: support@panzura.com

- About

- ABOUT PANZURA

- Our Company: We charted a new path to the top, and it’s a hell of a story!

- Leadership Team: Meet mavericks, motivators & masterminds who drive our success.

- Careers: We’re looking for the best & brightest. If that’s you, let us know.

- Press Room: Keep up with our latest news, insights & company updates.

Radically simplify and secure your unstructured data.

Panzura is your award-winning, hybrid, multi-cloud, end-to-end data management platform. We provide unstructured data mobility, access, security, and control through a single, cloud-native global file system to fuel your business innovation.

Panzura Named a Representative Vendor in the 2024 Gartner® Market Guide for Hybrid Cloud Storage

Introducing the world's most powerful and secure hybrid cloud data management platform.

The Panzura Data Management Platform is a unified platform that simplifies data complexity while boosting scalability, stability, security, and performance.

What does this mean for you? In one word… Everything.

Panzura solves all your most critical data management challenges.

It means you have better security, visibility, and control over your data — and therefore your business.

- 1/3 the TCO of other solutions

- 5000% faster than the competition

- 75% reduction in data growth

Security

Panzura offers the strongest data protection of any platform anywhere.

How it benefits you

- Find, track & restore millions of files in seconds.

- Isolate threats with DoD-grade, air-gapped data protection.

- Make your data immutable to any cyber threat or bad actor.

- Monitor suspicious activity with user behavior auditing & file accessibility tracking.

Visibility

Panzura gives you full visibility of your users, data & usage.

How it benefits you

- Identify threats in real time & neutralize them quickly.

- Analyze all of your unstructured data in real time.

- Empower your teams to collaborate globally without latency or data corruption.

- Save 1000s of IT hours with fast, efficient search functionality.

Control

Panzura lets you put your data to work.

How it benefits you

- Create comprehensive workloads in any public cloud without sacrificing security or egressing data.

- Leverage actionable intelligence to accelerate time-to-value from internal data.

- Simplify complex processes using our efficient, highly secure single platform.

- Eliminate duplicated data & locate missing files in seconds.

Your business is unique. Shouldn’t you expect the same from your data management platform?

We work with the smartest, most innovative players in multiple industries, providing data management solutions that are tailored for your specific industry needs.

Don't take our word for it. Here’s what our customers are saying.

“Every single country has Panzura running, and they are globally working together. It’s a fundamental because, for the majority of time over the last 20 years, those regions have been in silos and we had to shuffle content back and forth.”

— Walt Disney Corporation

“With Panzura, we deployed one global cloud file system across all sites, enabling engineers and designers to collaborate as one global team, dramatically increasing productivity and improving our bottom line.”

— Afry

“Panzura has been solving enterprise cloud data issues for over a decade and they know how to work with sports teams’ critical data. We are excited that they will be solving our cloud data issues for years to come. We found the partner we’d been looking for.”

— New Orleans Saints

“We initiated a cloud-first strategy and selected Panzura to consolidate our traditional storage environment, centralize and manage unstructured data in the cloud, and reduce our operating cost.”

— Fluor

“We run on AWS cloud and wanted a solution supporting multi-cloud architecture. With Panzura, our cloud data environments are running flawlessly. And getting data organized and accessible is worth its weight in gold!”

— Goop

“The Panzura solution was really a game changer for us. Essentially what we’ve done is create a global organization that is now virtually working in the same room.”

— Eric Hanson, VP of IT and Business Optimization, Milwaukee

We have kick-ass partners.

We’re already working with the companies you know and trust. Our solutions are integrated with theirs, and we coordinate our work to ensure you always get the best possible outcomes.