Enterprise data sharing should not make you want to yell at a cloud. There’s no reason to put up with legacy file storage. In fact, there are many reasons NOT to put up with it.

- First off, it’s outdated and doesn’t play nicely with the cloud.

- Second of all, there is no need to make even one copy of data anymore...

You can make enterprise data accessible and durable without making any copies

Legacy storage, think NetApp and Isilon, was designed for single sites (no, not “singles sites”). The only way for users to collaborate on files between sites, or for the business to meet its Recovery Point Objectives, is through scheduled replication.

That’s like scheduling increased cost into every project. You might as well schedule a paper cut. Ouch.

Scheduled replication is a costly bottleneck - easily 3x the original storage investment. It adds up quickly. Here’s how:

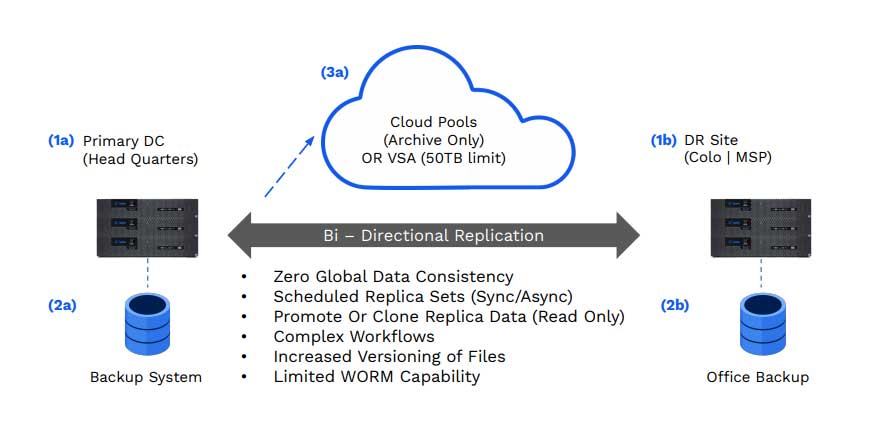

Traditional Network-Attached Storage deployments can create additional cost and complexity for even a simple two-site deployment.

Check this out. Over on the left is (1a), a primary datacenter holding files before replicating them to a secondary site (that's 1b), so they are easily accessible to users in multiple locations. That's twice the cost already, just so users can collaborate on projects remotely or recover the data if it’s lost.

In addition to this initial copy, enterprises are required to back up all their data (figure 2a) in case of data corruption or a malicious attack, like ransomware. To back up and store this data offsite (figure 2b), you’d have to pay your initial investment all over again!

3 times the initial cost? This figure gives us all chills… Costs aren’t just adding up - they’re multiplying!
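The arithmetic behind that 3x is easy to sketch. The capacity and per-terabyte price below are made-up illustrative numbers, not vendor figures; only the copy count comes from the two-site layout described above.

```python
# Hypothetical inputs: 100 TB of file data at an assumed price of 300 per TB.
PRIMARY_TB = 100
COST_PER_TB = 300

# Each entry is one full-size copy of the data set in the legacy layout.
legacy_copies = {
    "primary site (1a)": 1,
    "replica at secondary site (1b)": 1,
    "backup (2a), stored offsite (2b)": 1,
}

def total_cost(copies, tb, cost_per_tb):
    """Cost of holding every listed copy at full size."""
    return sum(copies.values()) * tb * cost_per_tb

baseline = total_cost({"primary site (1a)": 1}, PRIMARY_TB, COST_PER_TB)
legacy = total_cost(legacy_copies, PRIMARY_TB, COST_PER_TB)

print(f"single copy:   {baseline:,.0f}")
print(f"legacy layout: {legacy:,.0f} ({legacy / baseline:.0f}x)")
```

Swap in your own capacity and pricing; the multiplier stays at 3 for as long as every copy is kept at full size.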

Businesses also need to integrate their applications with cloud capabilities. Most legacy storage solutions weren’t designed with this in mind, so they aren’t compatible with the cloud. The applications generating data won’t be able to work with cloud storage, unless they get rewritten of course…

Instead, S3-compatible storage buckets are frequently used only as an archive, as shown in figure (3a), without using cloud-native services at all. This deployment limits the enterprise’s ability to migrate critical file storage and associated workflows to the cloud, forcing it to pay for multiple copies in the process!

No More Copies

Panzura’s intelligent hybrid cloud storage approach allows enterprises to make files immediately consistent across sites, providing enterprise-grade durability without replicating files for backup and disaster recovery.

Figure (4) shows a global file system that, instead of replicating files across locations, uses public, private or dark cloud storage as a single authoritative data source. Virtual machines at the edge (on-prem or in the cloud) overcome latency by holding the file system’s metadata as well as intelligently caching the most frequently used files to achieve local-feeling performance.
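To make the edge caching idea concrete, here is a minimal sketch of one edge node in front of a single authoritative store. It is not Panzura's implementation: the `EdgeCache` class is hypothetical, a plain dictionary stands in for the cloud object store, and a simple least-recently-used eviction rule stands in for whatever "intelligent" caching the real product uses.

```python
from collections import OrderedDict

class EdgeCache:
    """Hypothetical edge node: a small local cache in front of one authoritative store."""

    def __init__(self, cloud_store, capacity):
        self.cloud_store = cloud_store   # stands in for the cloud object store
        self.capacity = capacity         # max number of files held locally
        self.local = OrderedDict()       # path -> bytes, oldest use first
        self.cloud_reads = 0             # counts slow round trips to the cloud

    def read(self, path):
        if path in self.local:
            self.local.move_to_end(path)     # mark as recently used
            return self.local[path]
        self.cloud_reads += 1                # cache miss: fetch from the cloud
        data = self.cloud_store[path]
        self._remember(path, data)
        return data

    def write(self, path, data):
        self.cloud_store[path] = data        # the cloud copy stays authoritative
        self._remember(path, data)

    def _remember(self, path, data):
        self.local[path] = data
        self.local.move_to_end(path)
        while len(self.local) > self.capacity:
            self.local.popitem(last=False)   # evict the least recently used file


cloud = {"a.dwg": b"A", "b.dwg": b"B", "c.dwg": b"C"}
edge = EdgeCache(cloud, capacity=2)
for name in ["a.dwg", "a.dwg", "b.dwg", "a.dwg", "c.dwg", "a.dwg"]:
    edge.read(name)

print(edge.cloud_reads, list(edge.local))
```

The busy file is served locally after its first read, and only one copy of each file ever lives in the store behind the cache.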

User changes made at the edge are then synced with the cloud store and with every other location (after being de-duplicated, compressed and encrypted). All locations sync simultaneously.

This ensures up-to-the-minute file consistency for all locations in the file system, with immediate consistency achieved through peer-to-peer connections whenever users open files.

A New, Better Approach to Data Durability and Recovery

Panzura's hybrid cloud storage smarts use a “Write Once, Read Many” approach, storing unstructured data in immutable data blocks that cannot be modified, encrypted or overwritten.

As users and processes change these files at the edge, those edits are stored as new immutable data blocks. The file system pointers are updated with every change, to reflect which data blocks are required to form files at any given point in time.
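A toy version of that write-once model might look like the sketch below. The `WriteOnceStore` class is hypothetical, and naming each block by its SHA-256 hash is an assumption made for illustration; the article does not say how blocks are actually identified.

```python
import hashlib

class WriteOnceStore:
    """Hypothetical block store: blocks are added, never changed in place."""

    def __init__(self):
        self.blocks = {}     # block id -> bytes, written once
        self.pointers = {}   # file path -> ordered list of block ids

    def put_block(self, data):
        block_id = hashlib.sha256(data).hexdigest()   # assumed naming scheme
        # setdefault never replaces an existing entry, so stored blocks stay as written.
        self.blocks.setdefault(block_id, data)
        return block_id

    def write_file(self, path, chunks):
        # An edit only repoints the file; the older blocks remain in the store.
        self.pointers[path] = [self.put_block(chunk) for chunk in chunks]

    def read_file(self, path):
        return b"".join(self.blocks[block_id] for block_id in self.pointers[path])


store = WriteOnceStore()
store.write_file("plan.txt", [b"intro ", b"draft body"])
v1_pointers = list(store.pointers["plan.txt"])   # what the file looked like first

store.write_file("plan.txt", [b"intro ", b"final body"])

print(store.read_file("plan.txt"))
print(b"".join(store.blocks[b] for b in v1_pointers))
```

The second write adds one new block and reuses the unchanged one, while the earlier pointer list still resolves to the original contents.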

Panzura makes data restorable by taking lightweight snapshots at configurable intervals (figure 5), providing timely captures of the data blocks used by every file.

The data blocks themselves cannot be overwritten, so snapshots allow single files, folders or the complete file system to be restored, with lightning speed.
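Here is a small sketch of why such a restore can be quick, assuming a snapshot is nothing more than a saved copy of the file-to-block pointers. The block names, file name and helper functions are all hypothetical.

```python
import copy

# Hypothetical write-once blocks; nothing here is ever overwritten.
blocks = {
    "b1": b"Q3 budget v1",
    "b2": b"Q3 budget v2",
    "b3": b"ransomware-scrambled bytes",
}
pointers = {"budget.xlsx": ["b1"]}   # live view: file -> block ids
snapshots = {}                       # label -> frozen copy of the pointers

def take_snapshot(label):
    # Copies pointers only, not data, which is what keeps a snapshot lightweight.
    snapshots[label] = copy.deepcopy(pointers)

def restore_file(path, label):
    pointers[path] = list(snapshots[label][path])

def restore_all(label):
    pointers.clear()
    pointers.update(copy.deepcopy(snapshots[label]))

def read(path):
    return b"".join(blocks[block_id] for block_id in pointers[path])

take_snapshot("09:00")
pointers["budget.xlsx"] = ["b2"]     # a legitimate edit
take_snapshot("10:00")
pointers["budget.xlsx"] = ["b3"]     # an unwanted change after the snapshot

restore_file("budget.xlsx", "10:00")
print(read("budget.xlsx"))
```

Restoring moves no data: the file is simply pointed back at blocks that were never touched.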

Not only is this process faster and far more precise than restoring from traditional backups, but cloud providers themselves also replicate data across cloud regions or buckets to provide up to 13 9s of durability.

Even Cal Ripken Jr. would be jealous.

This exceeds the durability that many organizations can achieve using even multiple copies of data, and means that IT teams no longer need to maintain separate backup processes to replicate data. Instead, they can rely on the inherent durability provided by Panzura and the cloud object store itself.

Snapshot frequency and retention can be configured as required. For example, an organization may choose to capture hourly snapshots and keep them for a period of 30 days, along with weekly snapshots kept for 90 days, and monthly snapshots kept indefinitely.
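That example schedule can be written down as a small retention rule. This is a sketch, not a product configuration format: it assumes snapshots are taken on the hour, that the Sunday 00:00 snapshot counts as the weekly one, and that the 00:00 snapshot on the 1st counts as the monthly one.

```python
from datetime import datetime, timedelta

HOURLY_KEEP = timedelta(days=30)   # hourly snapshots kept for 30 days
WEEKLY_KEEP = timedelta(days=90)   # weekly snapshots kept for 90 days

def is_weekly(ts):
    # Assumption: the weekly snapshot is the one taken Sunday at 00:00.
    return ts.hour == 0 and ts.weekday() == 6

def is_monthly(ts):
    # Assumption: the monthly snapshot is the one taken at 00:00 on the 1st.
    return ts.hour == 0 and ts.day == 1

def keep(ts, now):
    """Return True if the snapshot taken at ts should still exist at now."""
    age = now - ts
    if is_monthly(ts):
        return True                  # monthly snapshots are kept indefinitely
    if is_weekly(ts) and age <= WEEKLY_KEEP:
        return True
    return age <= HOURLY_KEEP


now = datetime(2024, 6, 15, 12)
for ts in [
    datetime(2024, 6, 10, 5),    # recent hourly snapshot
    datetime(2024, 5, 1, 5),     # hourly snapshot older than 30 days
    datetime(2024, 4, 21, 0),    # Sunday snapshot, under 90 days old
    datetime(2024, 3, 10, 0),    # Sunday snapshot, over 90 days old
    datetime(2023, 1, 1, 0),     # first-of-month snapshot
]:
    print(ts.isoformat(), "keep" if keep(ts, now) else "expire")
```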

Immutable Data Against Damage and Loss

The quantity and caliber of organizations willing to admit to being hit by ransomware and other cyber threats suggest that there is no complete defense, yet.

By its nature, Panzura’s intelligent approach to data management makes organizations resilient against ransomware and other malware by preventing stored data from being encrypted, and restoring files, folders or the entire file system whenever required.

Additionally, the system slows ransomware attacks. Only frequently used files are cached at the edge, so as files and directories that are not cached are accessed, they need to be retrieved from cloud storage. This takes time.

In the event of a malware attack, data is written to object storage as new objects. The resulting spike in cloud traffic can trigger alerts and help detect attacks earlier, reducing contamination and allowing for faster recovery.
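As a rough illustration of how a write spike could raise an alert, the sketch below compares each interval's count of new objects against a rolling average. The `WriteSpikeDetector` class, the window size and the 5x threshold are all hypothetical choices, not how Panzura or any cloud provider actually alerts.

```python
from collections import deque
from statistics import mean

class WriteSpikeDetector:
    """Hypothetical monitor: flags intervals with far more new objects than usual."""

    def __init__(self, window=6, factor=5.0):
        self.history = deque(maxlen=window)   # recent normal intervals
        self.factor = factor                  # how far above normal counts as a spike

    def observe(self, new_objects):
        """Return True when this interval looks like a spike against recent history."""
        baseline = max(mean(self.history), 1) if self.history else None
        is_spike = baseline is not None and new_objects > self.factor * baseline
        if not is_spike:
            self.history.append(new_objects)  # keep spike traffic out of the baseline
        return is_spike


detector = WriteSpikeDetector()
counts = [40, 55, 38, 47, 52, 2900, 3100]     # new objects written per interval
alerts = [detector.observe(count) for count in counts]
print(alerts)
```

Leaving flagged intervals out of the history keeps an ongoing attack from quietly raising the baseline.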

*File deletion is subject to a secure erasure process, which cannot be run accidentally.

As Little as ⅓ the Total Cost of Ownership of Legacy Storage

The cloud offers tremendous potential for enterprises to reduce storage costs, improve productivity, increase data protection and reduce liability. Tapping that potential fully and effectively can provide significant competitive advantage while reducing both business and technological risk.

To date, enterprises trying to integrate cloud storage have built suboptimal solutions, creating a Frankenstein of different technologies from various vendors, many of which weren’t cloud compatible anyway. This approach fails to realize the full benefits of cloud storage while consuming precious IT resources in implementation and management.

Panzura lets organizations unify enterprise data, without the extra cost. Both data and locations can scale as required by using cloud storage like it’s a data center, without sacrificing productivity or compromising existing workflows.

Let the Blade Runners deal with all of those replicants.